
Urban Dynamic Objects LiDAR Dataset

for the ICRA 2021 publication:

Patrick Pfreundschuh, Hubertus F.C. Hendrikx, Victor Reijgwart, Renaud Dubé, Roland Siegwart, Andrei Cramariuc, “Dynamic Object Aware LiDAR SLAM based on Automatic Generation of Training Data”, IEEE International Conference on Robotics and Automation (ICRA), 2021.

Dataset Description

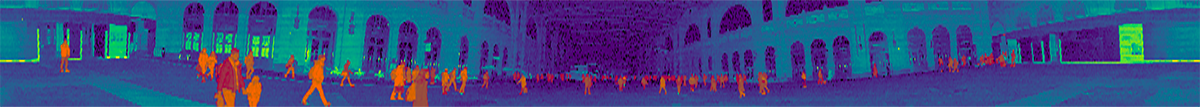

The dataset contains more than 12000 scans recorded in the main hall of ETH Zurich (Hauptgebaeude), at two different levels of the main train station in Zurich (Station, Shopville), and in a pedestrian zone popular with tourists (Niederdorf). The point clouds contain a large number of pedestrians. We provide two sequences at each location. The point clouds were recorded with a handheld Ouster OS1 64 (Gen 1) LiDAR at 10 Hz with 2048 points per revolution. We provide globally bundle-adjusted, high-frequency poses from a VI-SLAM pipeline. Trajectory lengths range from 100 to 400 m, and sequences last between 100 and 200 s.
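
As a rough consistency check on these figures, the nominal numbers work out as follows. This assumes that "2048 points per revolution" refers to the horizontal resolution, i.e. 2048 firings of all 64 beams per sweep, and that there are 8 real-world sequences (4 locations with 2 sequences each); the per-scan count is a nominal upper bound.

$$
\begin{aligned}
N_{\text{scan}} &= 64 \times 2048 = 131\,072 \ \text{points per scan (nominal)} \\
N_{\text{seq}} &= 10\ \text{Hz} \times (100 \text{ to } 200)\ \text{s} = 1000 \text{ to } 2000 \ \text{scans per sequence} \\
8 \times N_{\text{seq}} &= 8000 \text{ to } 16\,000 \ \text{scans in total}
\end{aligned}
$$

The total is compatible with the "more than 12000 scans" stated above.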

For evaluation purposes, a subset of the dataset was manually annotated. Pedestrians and objects associated with them (e.g., suitcases, bicycles, dogs) were annotated in 10 temporally separated point clouds for each of the 8 sequences. Pedestrians were annotated by appearance only, i.e., regardless of whether they were static or moving.

Additionally, we provide a simulated sequence of a small town. It contains moving cars, planes, pedestrians, and animals, as well as cylinders, spheres, and cubes of different sizes that move horizontally and vertically at different velocities and in different directions. The simulated sensor moves along a closed trajectory through the environment. Ground-truth poses and annotations of all moving objects are available for every point cloud. The simulated LiDAR is similar to an Ouster OS1 64 (Gen 1) LiDAR at 10 Hz with 2048 points per revolution.

Structure of the Dataset

We provide the sequences as rosbag files. The data is published using the following topics:

| Description | Topic | Message Type |
|---|---|---|
| Point Clouds | /os1_cloud_node/points | sensor_msgs/PointCloud2 |
| Transformations | /tf | tf2_msgs/TFMessage |
| Transformations | /T_map_os1_lidar (/T_map_os1_sensor) | geometry_msgs/TransformStamped |

We use 'map' as the world coordinate frame. Point clouds are recorded in the 'os1_lidar' frame (real-world datasets) or the 'os1_sensor' frame (simulated dataset). The corresponding poses of the lidar frame in world coordinates are provided on the /tf topic and on the /T_map_os1_lidar (simulated: /T_map_os1_sensor) topic.
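
To make the frame convention concrete, the following is a minimal sketch of expressing a point from the lidar frame in 'map' with one pose, assuming (as the topic name T_map_os1_lidar and the text above suggest) that the pose maps lidar-frame points into 'map' via p_map = R p_lidar + t. The `Pose` class and the helper names are hypothetical; only the (x, y, z, w) quaternion order follows geometry_msgs/Quaternion.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (x, y, z, w), same order as geometry_msgs/Quaternion


@dataclass
class Pose:
    """Hypothetical container: pose of the lidar frame in 'map' (translation + unit quaternion)."""
    translation: Vec3
    rotation: Quat


def cross(a: Vec3, b: Vec3) -> Vec3:
    """3D cross product a x b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by the unit quaternion q using v' = v + 2 u x (u x v + w v), with u = (x, y, z)."""
    x, y, z, w = q
    u = (x, y, z)
    c = cross(u, v)
    d = cross(u, (c[0] + w * v[0], c[1] + w * v[1], c[2] + w * v[2]))
    return (v[0] + 2.0 * d[0], v[1] + 2.0 * d[1], v[2] + 2.0 * d[2])


def lidar_to_map(pose: Pose, p_lidar: Vec3) -> Vec3:
    """Express a lidar-frame point in the 'map' frame: p_map = R p_lidar + t."""
    r = rotate(pose.rotation, p_lidar)
    t = pose.translation
    return (r[0] + t[0], r[1] + t[1], r[2] + t[2])
```

With a geometry_msgs/TransformStamped message `msg`, the pose could be filled from `msg.transform.translation` (fields x, y, z) and `msg.transform.rotation` (fields x, y, z, w). Applying a single pose to a whole scan ignores the ego-motion during the sweep.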

Because the data was recorded with a handheld sensor, the point clouds are distorted by the ego-motion of the sensor. The high-frequency poses in the dataset can be used to undistort them.
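
One common way to use high-frequency poses for undistortion is to map every point into 'map' with the pose at that point's own capture time instead of a single pose per scan. The sketch below does this with linear interpolation for the translation and slerp for the rotation. It assumes that each point's capture time in ns is known (e.g. from a per-point time field, if the PointCloud2 messages provide one, or estimated from the point's position within the 100 ms sweep; neither is specified above) and that the poses come from /T_map_os1_lidar, sorted by stamp. The `StampedPose` layout and all function names are hypothetical.

```python
import bisect
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (x, y, z, w)
StampedPose = Tuple[int, Vec3, Quat]      # hypothetical layout: (stamp_ns, translation, rotation)


def slerp(q0: Quat, q1: Quat, a: float) -> Quat:
    """Spherical linear interpolation between unit quaternions, a in [0, 1]."""
    dot = sum(x * y for x, y in zip(q0, q1))
    if dot < 0.0:                  # q and -q encode the same rotation; take the short arc
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:               # nearly parallel: normalized lerp avoids dividing by ~0
        q = [x + a * (y - x) for x, y in zip(q0, q1)]
    else:
        theta = math.acos(dot)
        s0 = math.sin((1.0 - a) * theta) / math.sin(theta)
        s1 = math.sin(a * theta) / math.sin(theta)
        q = [s0 * x + s1 * y for x, y in zip(q0, q1)]
    n = math.sqrt(sum(c * c for c in q))
    return tuple(c / n for c in q)


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by the unit quaternion q: v' = v + 2 u x (u x v + w v), u = (x, y, z)."""
    x, y, z, w = q
    c = (y * v[2] - z * v[1] + w * v[0],
         z * v[0] - x * v[2] + w * v[1],
         x * v[1] - y * v[0] + w * v[2])
    d = (y * c[2] - z * c[1], z * c[0] - x * c[2], x * c[1] - y * c[0])
    return (v[0] + 2.0 * d[0], v[1] + 2.0 * d[1], v[2] + 2.0 * d[2])


def pose_at(poses: Sequence[StampedPose], t_ns: int) -> Tuple[Vec3, Quat]:
    """Interpolate the sensor pose at t_ns from poses sorted by stamp."""
    stamps = [p[0] for p in poses]
    if not poses or not stamps[0] <= t_ns <= stamps[-1]:
        raise ValueError("t_ns lies outside the pose time range")
    i = bisect.bisect_right(stamps, t_ns)
    if i == len(poses):            # t_ns equals the last stamp
        return poses[-1][1], poses[-1][2]
    s0, p0, q0 = poses[i - 1]
    s1, p1, q1 = poses[i]
    a = (t_ns - s0) / (s1 - s0)    # s0 <= t_ns < s1, so s1 > s0
    p = tuple(x + a * (y - x) for x, y in zip(p0, p1))
    return p, slerp(q0, q1, a)


def undistort(points: Sequence[Tuple[Vec3, int]],
              poses: Sequence[StampedPose]) -> List[Vec3]:
    """Map each (lidar-frame point, capture time in ns) into 'map' using the pose at that time."""
    out = []
    for p, t_ns in points:
        trans, rot = pose_at(poses, t_ns)
        r = rotate(rot, p)
        out.append((r[0] + trans[0], r[1] + trans[1], r[2] + trans[2]))
    return out
```

The result is a motion-compensated cloud in 'map'. If the cloud is needed in the lidar frame instead, it can be transformed back with the inverse of the pose at a single reference time, e.g. the scan stamp.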

For each sequence, the annotated dynamic objects are provided in an 'indices.csv' file. Each line of 'indices.csv' starts with the timestamp (in ns) of the corresponding point cloud in the bag, followed by the indices of all points annotated as dynamic.
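
A minimal sketch for loading these annotations, assuming comma-separated values without a header row and zero-based indices into the point order of the matching PointCloud2 message (the text above does not specify delimiter, header, or index base). The function names are hypothetical.

```python
import csv
from typing import Dict, Iterable, List


def read_dynamic_indices(lines: Iterable[str]) -> Dict[int, List[int]]:
    """Parse indices.csv lines into {point cloud timestamp in ns: [dynamic point indices]}."""
    annotations: Dict[int, List[int]] = {}
    for row in csv.reader(lines):
        if not row or not row[0].strip():  # tolerate blank lines
            continue
        stamp_ns = int(row[0])
        # Remaining fields are point indices; empty fields (e.g. a trailing comma) are skipped.
        annotations[stamp_ns] = [int(v) for v in row[1:] if v.strip()]
    return annotations


def dynamic_mask(indices: List[int], num_points: int) -> List[bool]:
    """Per-point label for one cloud: True where the point was annotated as dynamic."""
    mask = [False] * num_points
    for i in indices:
        mask[i] = True
    return mask
```

A file can be passed directly, e.g. `read_dynamic_indices(open("indices.csv", newline=""))`. Matching an entry to its cloud is assumed to work via the message header stamp in nanoseconds (e.g. `msg.header.stamp.to_nsec()` in ROS 1 Python); this should be checked against the bag, since the text does not say whether the header stamp or the bag record time is used.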