
Dynablox: Real-time Detection of Diverse Dynamic Objects in Complex Environments

This page contains the data collected for Dynablox, our system for incremental mapping-based detection of diverse dynamic objects in complex environments. The code to run Dynablox on this data is available at https://github.com/ethz-asl/dynablox. The paper is available on arXiv:

Lukas Schmid, Olov Andersson, Aurelio Sulser, Patrick Pfreundschuh, and Roland Siegwart, “Dynablox: Real-time Detection of Diverse Dynamic Objects in Complex Environments”, in IEEE Robotics and Automation Letters (RA-L), Vol. 8, No. 10, pp. 6259 - 6266, 2023.

The Dynablox Dataset

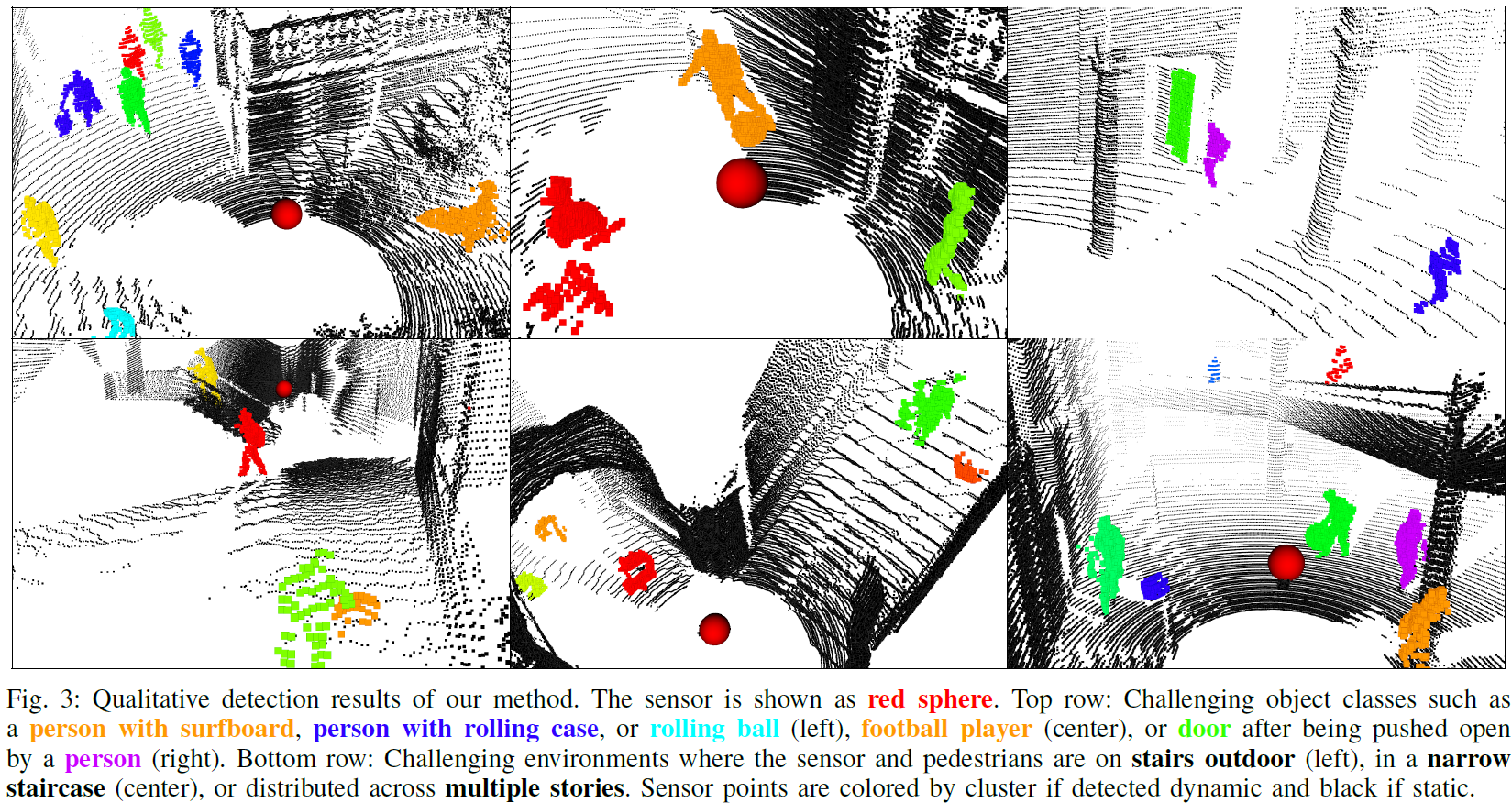

The Dynablox dataset consists of several sequences collected with a 128-beam OS0 high-range, high-resolution LiDAR. It features various typical (e.g., pedestrians) and atypical (e.g., balls being tossed or bounced, luggage rolling down ramps, people hidden behind large objects such as boxes or surfboards) dynamic objects in complex environments (indoor and outdoor scenes with clutter, stairs, ramps, or multiple levels). Several excerpts of the dataset are shown in our accompanying video.

Data Layout

We provide rosbags of 8 sequences:

- corridor_1

- corridor_2

- hg_1

- h_2

- indoor

- ramp_1

- ramp_2

- stairs

Two versions of each bag are available. The Raw archive includes the original recorded point clouds, IMU data, and an initial onboard visual-inertial state estimate. We further provide a Processed archive, which contains state estimates produced by FAST-LIO2 and point clouds that were undistorted based on these state estimates. It only contains point clouds for which a state estimate is available (this typically cuts the first second, while FAST-LIO2 is ramping up). This data is ready to be processed by Dynablox and is the recommended data source for it. We release the raw bags in case you wish to apply your own processing to the raw data.
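The two preprocessing steps behind the Processed archive (dropping clouds without a state estimate, and undistorting the remaining clouds using the estimated motion) can be illustrated with a minimal pure-Python sketch. This is not FAST-LIO2's implementation, which compensates motion using IMU-propagated full 3D poses; the planar `Pose` type (yaw only, no roll or pitch), the linear pose interpolation, and the function names `interpolate_pose` and `undistort_cloud` are hypothetical simplifications chosen for illustration.

```python
import bisect
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass
class Pose:
    """Hypothetical planar pose: translation (x, y, z) and heading yaw [rad] at time t [s]."""
    t: float
    x: float
    y: float
    z: float
    yaw: float


def wrap_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def interpolate_pose(poses: Sequence[Pose], t: float) -> Optional[Pose]:
    """Linearly interpolate the pose at time t (poses sorted by t).

    Returns None if t lies outside the time span covered by the state estimates.
    """
    stamps = [p.t for p in poses]
    if not stamps or t < stamps[0] or t > stamps[-1]:
        return None
    i = bisect.bisect_left(stamps, t)
    if stamps[i] == t:
        return poses[i]
    p0, p1 = poses[i - 1], poses[i]
    a = (t - p0.t) / (p1.t - p0.t)
    return Pose(
        t,
        p0.x + a * (p1.x - p0.x),
        p0.y + a * (p1.y - p0.y),
        p0.z + a * (p1.z - p0.z),
        # Interpolate along the shorter arc between the two headings.
        wrap_angle(p0.yaw + a * wrap_angle(p1.yaw - p0.yaw)),
    )


def sensor_to_world(pose: Pose, p: Point) -> Point:
    """Rotate by yaw about z, then translate."""
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return (c * p[0] - s * p[1] + pose.x, s * p[0] + c * p[1] + pose.y, p[2] + pose.z)


def world_to_sensor(pose: Pose, p: Point) -> Point:
    """Inverse of sensor_to_world: un-translate, then rotate by -yaw."""
    dx, dy = p[0] - pose.x, p[1] - pose.y
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return (c * dx + s * dy, -s * dx + c * dy, p[2] - pose.z)


def undistort_cloud(
    points: Sequence[Tuple[float, float, float, float]],
    poses: Sequence[Pose],
    t_ref: float,
) -> Optional[List[Point]]:
    """Re-express points (t, x, y, z), each measured at its own time t, in the sensor frame at t_ref.

    Returns None (the cloud is dropped) if any required pose is unavailable,
    mirroring how clouds without a state estimate are omitted.
    """
    ref = interpolate_pose(poses, t_ref)
    if ref is None:
        return None
    out: List[Point] = []
    for t, x, y, z in points:
        pose = interpolate_pose(poses, t)
        if pose is None:
            return None
        out.append(world_to_sensor(ref, sensor_to_world(pose, (x, y, z))))
    return out
```

For example, if the sensor moves 1 m along x during a sweep, a static wall point observed 5 m ahead at the start of the sweep and 4 m ahead at its end both map to (4, 0, 0) when expressed at the sweep end time. Using the sweep end as the reference time is one common convention; other pipelines use the sweep start instead.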